Original Content Can Help You Get the Desired Rank

To get the desired SEO rank for a website, a developer or website owner has to deal with duplicate content, which can be a daunting task. They should therefore always check content for plagiarism before publishing it on the site.

Content Marketers And SEO Professionals Should Value Original Content

The reason is that many developers and content management systems (CMSs) do a great job of displaying content but pay little attention to how that content looks from a search engine's perspective.

The problem is made worse because many free duplicate content checkers available online do not always give correct results: content they report as original may still contain traces of plagiarism.

Two kinds of copied content cause problems:

- First kind: onsite duplication

This occurs when the same or very similar content appears on two or more unique URLs of the same site. The site administrator and the web development team can usually fix it.

- Second kind: offsite duplication

This is when the same content is published on two or more websites. Although it cannot be controlled directly, working with third parties and the owners of the offending websites can resolve such issues.
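For onsite duplication, a site owner can find URLs that serve the same text before search engines do. Below is a minimal Python sketch, assuming you already have each URL's extracted body text in a dictionary (the `pages` data and the function names are hypothetical): it normalizes case and whitespace, hashes the result, and groups URLs that share a hash. It only catches copies that are identical after normalization; near-duplicates need a similarity measure instead.

```python
import hashlib
import re
from collections import defaultdict


def normalize_text(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences don't hide duplicates."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_onsite_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized body text is identical.

    `pages` maps URL -> extracted body text (hypothetical input; crawling and
    text extraction are left to your own tooling).
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalize_text(text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    # Only hashes shared by two or more URLs indicate duplication.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical example: the same product text reachable under two URLs.
pages = {
    "https://example.com/shoes": "Red running shoes, size 42.",
    "https://example.com/sale/shoes": "Red  running shoes,\nsize 42.",
    "https://example.com/about": "About our store.",
}
print(find_onsite_duplicates(pages))
```

Each group returned is a candidate for a redirect or a canonical tag pointing at one preferred URL.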

Unique and Original Content Attract More Visitors

Why is copied content a problem?

The best way to understand this is to first understand why original content is so valuable. One of the best ways to set a website apart from others is to have original, unique content.

It signals that the website offers something new and exclusive that other websites, which lack that content, cannot.

Moreover, unique content is generally of higher quality, because it is usually screened with various online tools that catch remaining mistakes.

However, if a website uses the same content as others to describe its products and services, it does not stand apart from the rest.

Types of offsite duplicate content and their remedies:

- Content scrapers and thieves

This happens when spammers or other bad actors copy a website's content and publish it on their own sites.

Solutions –

- Writers, including freelance writers, should submit a copyright infringement report to Google.

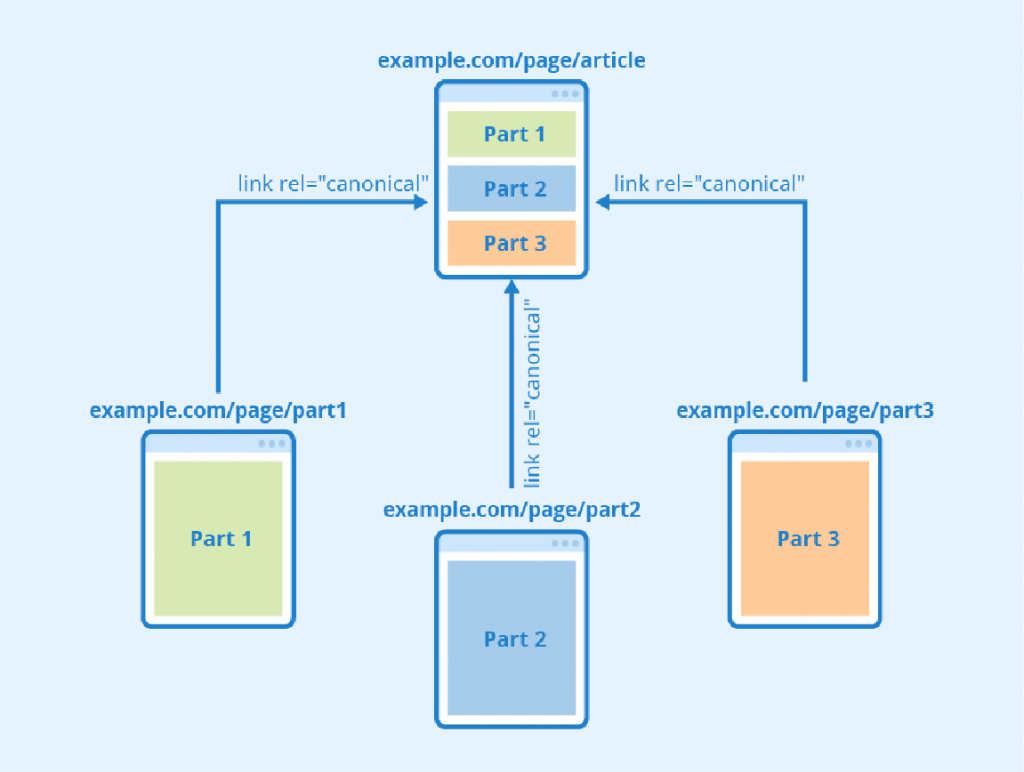

- The web designer should add canonical tags that point back to the original web page.
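A canonical tag is a `<link rel="canonical" href="...">` element in a page's `<head>` that names the preferred URL for that content. If the original page carries a canonical tag pointing at itself, a scraper that copies the HTML wholesale may carry that pointer along. The Python sketch below only builds the tag string; the function name is hypothetical, and inserting it into your templates depends on your CMS.

```python
from html import escape


def canonical_link_tag(original_url: str) -> str:
    """Return a <link rel="canonical"> element pointing at the original page.

    The URL is HTML-escaped so characters such as '&' in a query string
    remain valid inside the attribute value.
    """
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'


print(canonical_link_tag("https://example.com/blog/original-content?ref=a&b=1"))
# <link rel="canonical" href="https://example.com/blog/original-content?ref=a&amp;b=1">
```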

Canonical Tags Can Save A Web Page From Duplication

- Article distribution

This type of duplication results from publishing the same article on multiple sites to build links to a particular website.

Solution –

- Limit and control the number of duplicate versions. This helps the original page avoid being treated as duplicate content and improves its chances of ranking well in search results.

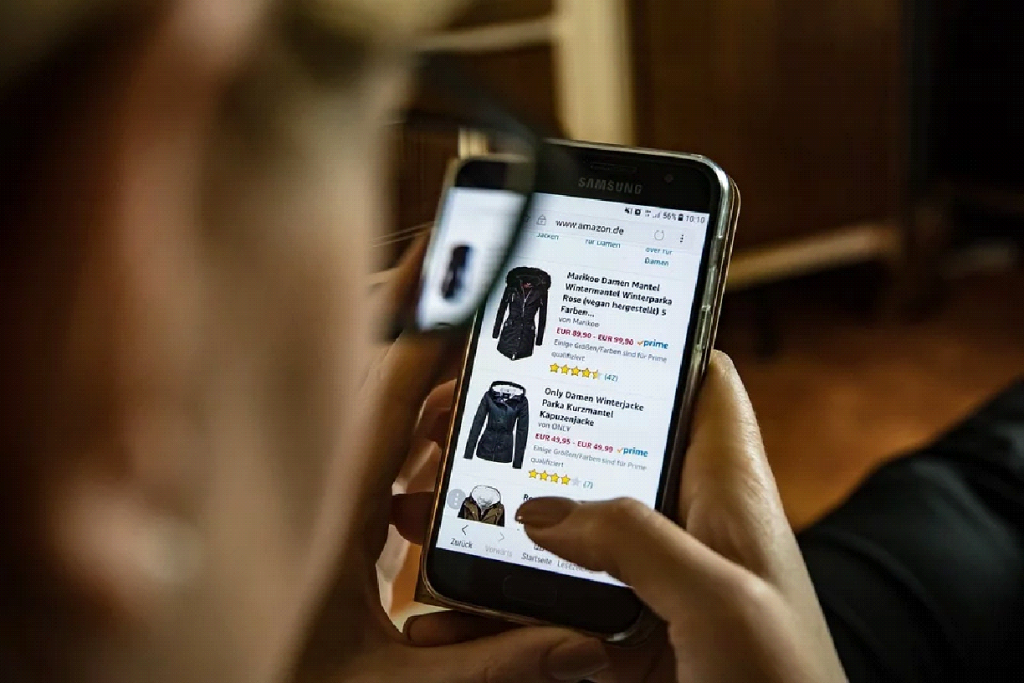

- Similar product descriptions

Reusing the same text across multiple product descriptions has recently become a common mistake.

Product Descriptions Should Go Through an Online Plagiarism Checker

Solutions –

- Use one of the many plagiarism checkers available online.

- Generate more internal links for the website, as this is a signal that is picked up by search engines.
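Plagiarism checkers work in different ways, and this is not a description of any particular tool. One common technique for spotting near-duplicate text is to split each text into overlapping word sequences ("shingles") and compare the resulting sets with Jaccard similarity (shared shingles divided by all distinct shingles). A minimal Python sketch, assuming 3-word shingles; the function names and sample descriptions are hypothetical, and any threshold for "too similar" is a judgment call you would tune yourself.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word sequences) in text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Very short texts become a single (possibly empty) shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i : i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 3) -> float:
    """Jaccard similarity of the two texts' shingle sets: |A & B| / |A | B|."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0  # two empty texts are treated as identical
    return len(sa & sb) / len(sa | sb)


desc_a = "Lightweight running shoe with a breathable mesh upper"
desc_b = "Lightweight running shoe with a breathable mesh upper and a padded collar"
print(jaccard_similarity(desc_a, desc_b))  # 0.6
```

A score near 1.0 means two product descriptions are almost the same text and probably need rewriting.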

Types of onsite duplicate content:

- Product categorization duplication

This is caused by CMSs in which a single product is tagged with several categories, so the same product page becomes reachable under several category URLs.

Solution –

Tag each product with a single category instead of several.

- Redundant URL duplication

This occurs when almost every page on a website can be reached through slightly different URLs, for example with and without a trailing slash, or with and without "www.".

Solution –

The web designer should ensure that all alternate versions of a URL redirect to the canonical (original) URL.
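Redirecting every variant starts with deciding what the one canonical form of a URL is. The Python sketch below uses hypothetical site rules (https only, lowercase host without "www.", no trailing slash, no `utm_*` tracking parameters) and computes where a request should be sent; the actual redirect, usually a permanent 301, would be issued by your web server or framework. Path case is left alone because paths can be case-sensitive.

```python
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def canonical_url(url: str) -> str:
    """Map a URL variant to one canonical form under hypothetical site rules:
    https, lowercase host without 'www.', no trailing slash, no utm_* params."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    path = parts.path.rstrip("/") or "/"  # keep "/" for the home page
    query = urlencode(
        [
            (key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if not key.lower().startswith("utm_")
        ]
    )
    return urlunsplit(("https", host, path, query, ""))  # fragment dropped


def redirect_target(url: str) -> Optional[str]:
    """Return the URL to 301-redirect to, or None if the URL is already canonical."""
    target = canonical_url(url)
    return None if target == url else target


print(redirect_target("http://WWW.Example.com/Shoes/?utm_source=ad&color=red"))
print(redirect_target("https://example.com/Shoes?color=red"))
```

The first call returns `https://example.com/Shoes?color=red`; the second returns `None`, so canonical URLs are never redirected and no redirect loop occurs.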

- A/B test and ad landing page duplication

Here, multiple versions of similar content are created, either as ad landing pages or for A/B testing.

Solution –

The web designer should add a noindex meta tag to each of these duplicate pages to keep them out of search engines' indexes.
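The noindex directive is written as `<meta name="robots" content="noindex">` in a page's `<head>`. A minimal Python sketch of how a template helper might emit it only for duplicate variants, assuming your site already knows which pages are ad landing pages or A/B test versions (the function name and the `pages` mapping are hypothetical). The primary page gets no robots tag, which leaves it indexable by default.

```python
def robots_meta_tag(is_duplicate_variant: bool) -> str:
    """Return the robots meta tag for a page's <head>.

    Duplicate variants (ad landing pages, A/B test versions) get 'noindex';
    the primary page gets an empty string, i.e. no robots tag at all.
    """
    if is_duplicate_variant:
        return '<meta name="robots" content="noindex">'
    return ""


# Hypothetical pages: one primary version and two duplicate variants.
pages = {
    "/offer": False,           # primary version, should stay indexed
    "/offer-variant-b": True,  # A/B test variant
    "/lp/spring-ad": True,     # ad landing page
}
for path, is_variant in pages.items():
    print(path, robots_meta_tag(is_variant) or "(no robots tag)")
```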

These are some of the ways website owners can protect their sites from duplicate content issues.
